What is it?

GPT-3 is a machine learning language model created by OpenAI, a leader in artificial intelligence. In short, it is a system that has consumed enough text (nearly a trillion words) that it is able to make sense of text, and output text in a way that appears human-like. I use 'text' here specifically, as GPT-3 itself has no intelligence –it simply knows how to predict the next word (called a token) in a sentence, paragraph, or text block. It does this exceedingly well.

As an analogy you can think of GPT-3 like a freshly hired intern, who is well read, opinionated, and has a poor short-term memory. It is clever and offers fresh perspectives on how to solve problems, yet you don't really trust it to run your company or talk directly to customers.

GPT-3 works by taking a section of input text, and predicting the next section of text that should follow directly after. When hearing this people often compare it to autocorrect. The biggest difference being creativity. When you use GPT-3, you supply your input, and a few options. The most important of which is called temperature, the measure of how creative the outputs will be. Computers are not designed to be creative, so effectively this is option gives GPT-3 the freedom to questionable choices.

If you read everything there is to read, and stored how likely words are to appear together, in context, then you should be able to 'guess' how a sentence or story will sound. This is hard to conceptualize because we as humans don't process information like this.

GPT-3 is like a freshly-hired intern, who is well read, opinionated, and has a poor short-term memory

More specifically GPT-3 stands for 'Generative Pretrained Transformer 3', with transformer representing a type of machine learning model that deals with sequential data.

Why is fancy autocorrect interesting?

Well, it turns out that given enough input data, an AI like GPT-3 is able to repeatably perform non-trivial tasks. If you supply it well-structured input text you can get GPT-3 to respond very naturally, often appearing as if a person was generating the answers. This makes GPT-3 well suited for tasks such as creative writing, summarization, classification, and transactional messaging.

How can I use it for my business?

Access

Right now GPT-3 is only available through OpenAI's API product offering. They will likely never release the full model to the public as they did with previous versions (GPT-2). The OpenAI API is the companies first public product and is still relatively early in its development cycle. As of June 2020, OpenAI has opened up a waitlist to be invited to join the beta. They claims 10s of thousands of people have asked to be invited, so if you have a special use-case you will likely have to email a an OpenAI employee with a specific request. They have been slowly on-boarding people over the course of the last month.

Join the waitlist

Product offerings

There are two types of API endpoints that companies can access, namely completions and search.

Completions: The headline product, a completion lets GPT-3 take in an input prompt (which we will cover in detail below), and complete that to return a result.

Search: GPT-3 is very competent at parsing natural language inputs, making it ideal for building search tools.

Models: OpenAI currently offers 4 models (ada, babbage, curie, davinci), each of a different size. The flagship model is 'davinci', representing the 175 billion parameters touted in the media. Model size directly correlates to how fast the API calls are handled, so for time-sensitive requests davinci will likely be too slow. See 'Speed' below for more.

Fine-tuning: OpenAI will offer to train a model specifically for you, based on a dataset that you supply. Fine-tuning will be ideal for companies to produce extremely accurate outputs for tasks with more than a few dozen input prompts, or that require a very specific output structure. Read more in Fine-tuning below.

Playground

Beyond just making calls using code to the API, OpenAI also offers a user interface called the playground. This lets developers quickly test ideas and refine input prompts before committing to create an application. It is easy to foresee that some variant of this playground could be made in a public-facing manner for anyone to get help with smaller problems.

Pricing

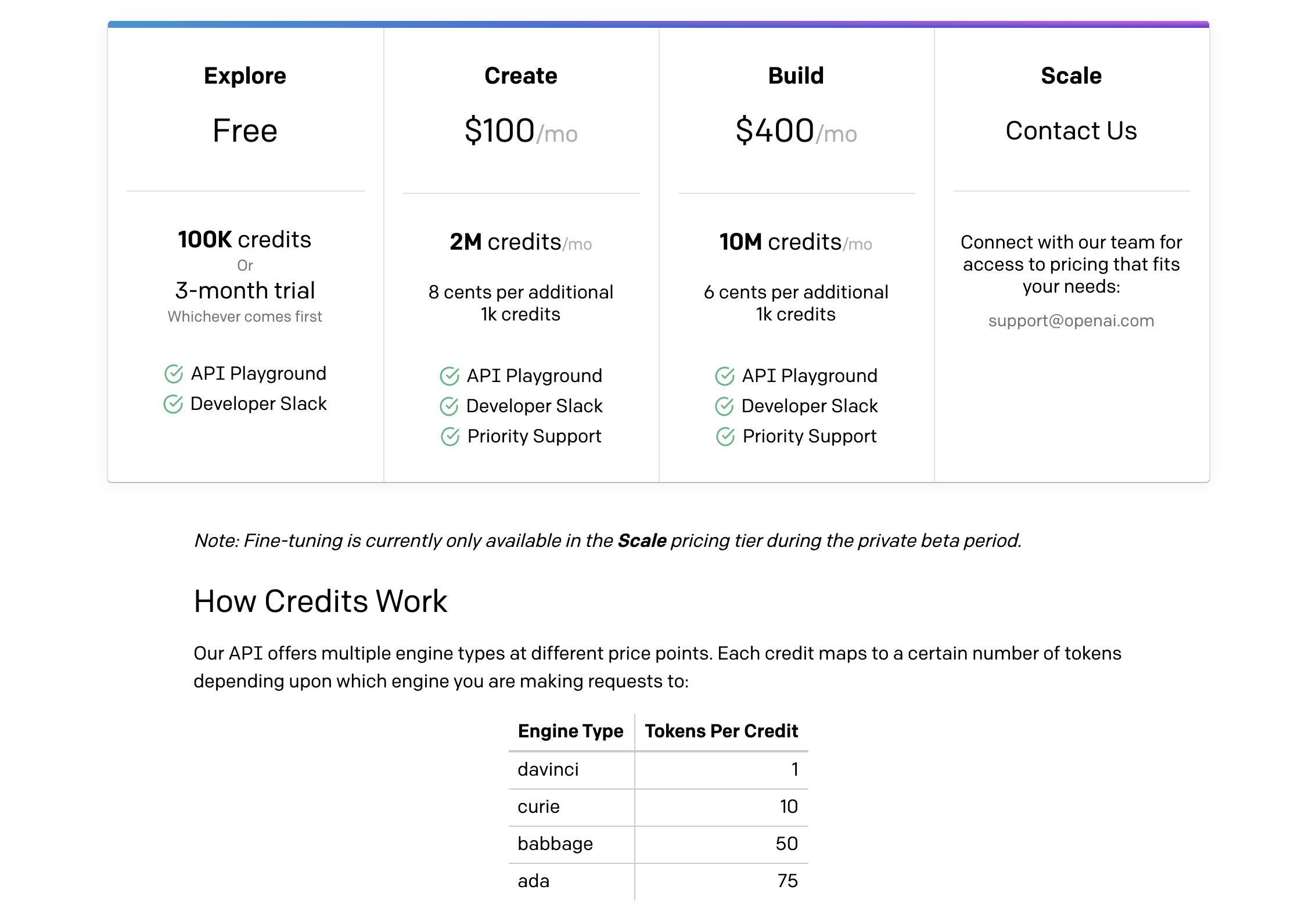

This API is currently in beta testing stage and was free to use until October. Starting 10/1 the following prices are in place:

While there is a free 'Explore' tier offered, it exists more as an interest builder than a useful allotment of tokens. It is quite easy to use up 100k tokens in an afternoon. Each engine varies greatly in size, and this variance is reflected in the 'token per credit system'. The smaller models are significantly more economical to run, though they will lack the serendipity and creativity of the largest Davinci engine.

Assuming 1,000 tokens used per request (prompt+output) a subscriber on the create tier would be allowed to use:

~$0.05/call for davinci (2,000)

~$0.005/call for curie (20,000)

~$0.001/call for babbage (100,000)

~$0.0007/call for ada (150,000)

It is important to understand that GPT-3 has no direct competition for creative output, meaning they can easily price the API as they wish going forward.

Limitations and Warnings

Stability

The OpenAI API is very much still a beta product, and has already had a few periods of unplanned downtime since I have used it. It is not yet suitable for critical business use cases. The OpenAI team has been communicative and very fast to respond to these outages, so I have no doubt they will be ironed out before a general release happens.

Speed

In my usage the latency of the API has been poor to OK. It is certainly one of the slowest APIs I have recently used. This is likely one of the main areas OpenAI will need to improve on before going live to a large audience. Serving a model the size of davinci (~350gb) efficiently is a non-trivial problem!

Content Moderation

As it stands API calls are made to the 'raw model'. This means that the outputs are unfiltered and may contain explicit, hateful, or racist content. 'davinci' was trained using Common Crawl, a service that records the content of web pages -including reddit, forums, and other

OpenAI is upfront with this and clearly states that applications should not let the user interact directly with the raw model. They currently require applications to go through an approval process before going into production.

Limited Inputs & Outputs

Currently the OpenAI API only allows for an input prompt to be 1000 tokens long (or around 500 words). This means that you must fit all of the context you are supplying in that prompt, quite a challenge.

Should I build a GPT-3-based company?

tl;dr – Maybe. There are some specific concerns to be aware of though.

Almost everyone I have talked to who plays with GPT-3 has that ah-ha! moment where they think of a great project to use it on. The play

You are taking a significant platform risk.

- OpenAI owns the API and they are free to do whatever they want with it, regardless of how that impacts your business.

- GPT-3 has no direct competitors (yet). This gives OpenAI incredible pricing power, access control, and the ability to add/enforce policies.

- Your service is tied to GPT-3 working correctly. GPT-3 is a very large ML model, that requires tangible compute resources to operate, which means it has the potential to run into scaling issues. (as alerady seen during the beta period)

- The speed of your application will be directly dependent on the OpenAI API.

Differentiation is hard

- GPT-3 is a few-shot model, therefore it is relatively trivial to reverse-engineer an input prompt. (you can read more in the scientific paper)

- Be careful that your app itself provides value, and doesn't simply let the OpenAI API do all the 'real work'.

Garbage in >> Garbage out

In working with GPT-3 one thing becomes very clear, the inputs you feed it matter –a lot! As a few-shot model, it is extrapolating what it thinks the right response is exclusively from the up to 1000 tokens of input you provide.

Where do language models go from here?

This OpenAI is still an early implementation of AI. As compute power and algorithm optimization continue to increase we will be able to effectively train much larger models. Based on estimates, GPT-3 cost roughly $4.6M worth of compute time to train.

GPT-2: 1.5 billion parameters

GPT-3: 175 billion parameters

GPT-4: ???

Human brain: 100 trillion synapses (analygous to parameter)