The hype around GPT-3 right now is frothy. It feels like a 'game-changer' to many, and the potential it unlocks is touted at every turn. But should you really build (or invest in) a company based on GPT-3? Note: these are solely my opinions. I have used GPT-3 quite extensively now, for a number of different tasks and to build demos.

My take: Not yet. (well, maybe)

Let's back up for a second. Here is what we know about GPT-3.

- It is a huge language model (350 GB, 175B parameters)

- It has a 2,048-token (~word) attention span, with a maximum output of 512 tokens

- It is in a limited, invite-only beta right now (the Slack group has ~1k members in total).

- It runs in the Azure cloud, and you can only access it through OpenAI's API.

- When doing complex tasks it is relatively slow to use.

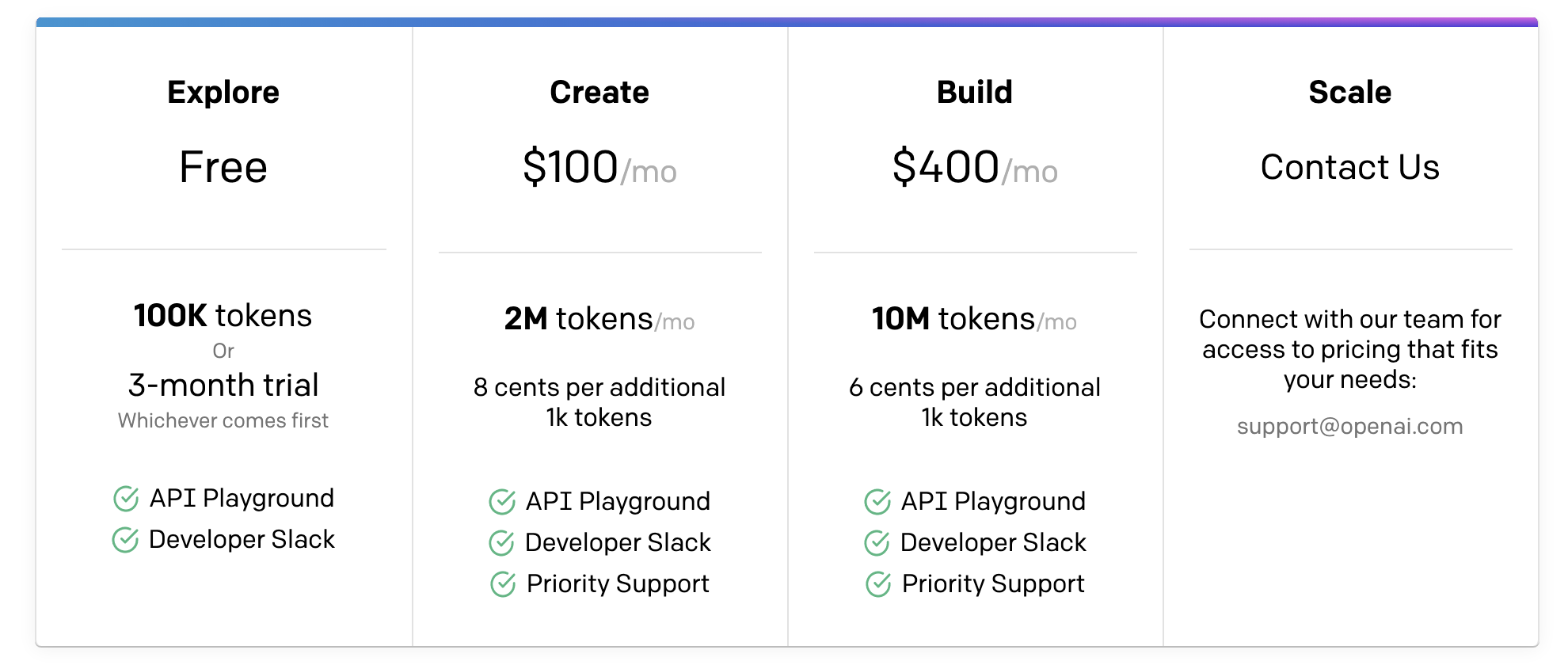

- It is relatively expensive (~2-16¢ per API call) *Edit: see chart below

- To use it in production, you need to go through a review process and actively limit toxic outputs.

That all sounds a little scary, right? It should: these are non-trivial platform risks that would likely sit on the critical path of any GPT-3-based business. Are there competitors? Sure. But none match the ease of use and general output quality of the huge 'davinci' engine.

Risks

You are at their mercy for pricing

Even if pricing starts out cheap, without immediate competition OpenAI has serious pricing power. You will be a price taker. Good luck with your chatbot, though (sorry).

**Edit 9/1/20: We now have an idea of how expensive API calls will be. In short, it is fairly expensive for an API, costing as much as $0.16 per call. Practically speaking, this means that most applications using GPT-3 will need to bill users based on usage rather than a monthly subscription. I don't believe this price point is unfair, but it does limit the practical usage of the API. In the personas demo I made, each page load requires 5 API calls (averaging ~1,200 tokens each), followed by another n calls for chat messages. So the rough math is 6,000 + 500n tokens for a page load followed by n chat messages. I can safely say that just in playing with GPT-3 I have used millions of tokens. Ouch.
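
To make the usage-based billing point concrete, here is a rough per-session estimate for the personas demo. It is a sketch under one loud assumption: the per-token price is backed out of the $0.16 figure above by treating it as the cost of a full 2,048-token call (prompt plus output). It is not taken from OpenAI's published rate card.

```python
# ASSUMPTION: price per token backed out of the "$0.16 per call" ceiling,
# treating that ceiling as one full 2,048-token call (prompt + output billed).
FULL_CALL_TOKENS = 2048
FULL_CALL_COST_USD = 0.16
USD_PER_TOKEN = FULL_CALL_COST_USD / FULL_CALL_TOKENS  # ~$0.078 per 1K tokens

# Figures from the personas demo described above.
PAGE_LOAD_CALLS = 5
TOKENS_PER_PAGE_LOAD_CALL = 1200
TOKENS_PER_CHAT_MESSAGE = 500


def session_tokens(chat_messages: int) -> int:
    """One page load plus n chat messages: 6,000 + 500n tokens."""
    return PAGE_LOAD_CALLS * TOKENS_PER_PAGE_LOAD_CALL + TOKENS_PER_CHAT_MESSAGE * chat_messages


def session_cost_usd(chat_messages: int) -> float:
    """Estimated cost of one session at the assumed per-token price."""
    return session_tokens(chat_messages) * USD_PER_TOKEN


for n in (0, 10, 50):
    print(f"{n:>2} chat messages: {session_tokens(n):>6,} tokens, ~${session_cost_usd(n):.2f}")
```

Under those assumptions a page load alone costs roughly $0.47 and each chat message roughly $0.04, so cost grows with every message a user sends, which is hard to cover with a flat monthly price.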

You are at their mercy for latency

GPT-3 is not a tiny little model, so it takes some time for results to come back. With the caveat that it is still in beta, the model already shows slowdowns at high-traffic times (as mentioned in the Slack group). This means that speed might differ between the middle of the day and the night, and that traffic spikes could have a real impact. While short chat prompts have returned quickly in my testing, complicated prompts that generate written prose take much longer. This leads to problems, because...

The model's attention span is short

You need to be able to perform all of your work in roughly 2,000 tokens. If you cannot, you will need more complex execution methods, such as context-stuffing (feeding a recap of earlier results back into each new prompt), to help GPT-3 retain some understanding of what you are trying to accomplish across multiple API calls. This means making calls to the API in serial, which considerably slows your app down. In a demo I built, I make 5 serial calls to GPT-3, and the main process takes ~50-80 seconds to complete. Ouch. I might be able to shave a little time off of this, but not much. That is a long time to wait for a third-party API to return. (I remain hopeful that execution speed will get much faster by the end of the beta period!)
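
A minimal sketch of serial context-stuffing. `complete` is a hypothetical stand-in for whatever function sends a prompt to the API and returns the completion (it is not the OpenAI client library), and the prompt template is an illustrative assumption. The point is the data dependency: each prompt contains the previous output, so the calls cannot run in parallel and their latencies add up.

```python
import time
from typing import Callable, List

# HYPOTHETICAL stand-in for "send this prompt to the API, return the completion".
Complete = Callable[[str], str]


def summarize_with_context(chunks: List[str], instruction: str, complete: Complete) -> List[str]:
    """Summarize chunks in order, stuffing the previous summary into each new prompt."""
    summaries: List[str] = []
    recap = ""
    for chunk in chunks:
        prompt = (
            f"{instruction}\n\n"
            f"Summary so far:\n{recap}\n\n"
            f"New text:\n{chunk}\n\n"
            "Summary:"
        )
        # Blocks until this call returns; the next prompt depends on its output.
        recap = complete(prompt)
        summaries.append(recap)
    return summaries


if __name__ == "__main__":
    def slow_echo(prompt: str) -> str:
        time.sleep(0.1)  # pretend this is network + model latency
        return "summary of: " + prompt.split("New text:\n", 1)[1].split("\n", 1)[0]

    start = time.perf_counter()
    result = summarize_with_context(["part one", "part two", "part three"], "Summarize the story.", slow_echo)
    elapsed = time.perf_counter() - start
    print(result)
    print(f"3 serial calls took ~{elapsed:.1f}s")  # roughly 3 x the per-call latency
```

Swapping `slow_echo` for a real API call keeps the same shape: total time is still roughly the number of calls times the per-call latency, which is how 5 calls turn into ~50-80 seconds.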

What does this all mean? It means you have to understand what you can reasonably create based on how long operations take.

Consider an app that is trying to summarize a long input text (a book or article) using basic context-stuffing. You can get more complicated than this, but bear with me for a moment.

You start with 2048 total tokens. From that you need to remove:

- At least one sentence to set initial context (assume 48 tokens for simplicity)

- 0-512 tokens to recap previously summarized context

- 0-512 tokens for output

That leaves ~1,000-1,800 new tokens that can be summarized per call, which equates to roughly 2-4 written pages or 00:07:30-00:13:50 of transcribed audio.
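
The budget above is plain subtraction. As a sketch (the function name is mine, and the 200-token output in the second example is an illustrative assumption, since any output length up to 512 fits):

```python
CONTEXT_WINDOW = 2048     # total tokens per call (prompt + completion)
INSTRUCTION_TOKENS = 48   # the one-sentence setup assumed above
MAX_SLOT = 512            # upper bound used above for both the recap and the output


def new_tokens_per_call(recap_tokens: int, output_tokens: int,
                        window: int = CONTEXT_WINDOW,
                        instruction_tokens: int = INSTRUCTION_TOKENS) -> int:
    """Tokens left for fresh source text after reserving the other slots."""
    if not (0 <= recap_tokens <= MAX_SLOT and 0 <= output_tokens <= MAX_SLOT):
        raise ValueError("recap and output are each assumed to use 0-512 tokens")
    return window - instruction_tokens - recap_tokens - output_tokens


print(new_tokens_per_call(512, 512))  # worst case: 976, i.e. ~1,000
print(new_tokens_per_call(0, 200))    # e.g. a first call with a 200-token summary: 1800
```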

In other words, you can summarize a book at roughly 4:1 to 10:1 compression in a single pass, taking about (pages/4) × 5 seconds: one call per ~4 pages at ~5 seconds per call. I am being generous with the completion time, but a 100-page book would take 00:02:05, and a 500-pager would take over 10 minutes. That firmly puts long-form summarization in the 'we will email you when your task is done' category.
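
The same single-pass timing estimate as a sketch. The constants are the text's own assumptions (about 4 pages of new text and about 5 seconds per call); rounding up to whole calls is my addition, since a partial chunk still costs a full call.

```python
import math

PAGES_PER_CALL = 4     # ~4 pages of new text per call, per the budget above
SECONDS_PER_CALL = 5   # the generous per-call completion time assumed in the text


def single_pass_seconds(pages: int) -> int:
    """Serial calls, one per ~4 pages (rounded up), at ~5 s each."""
    calls = math.ceil(pages / PAGES_PER_CALL)
    return calls * SECONDS_PER_CALL


def as_hms(seconds: int) -> str:
    """Format seconds as HH:MM:SS, matching the notation used above."""
    return f"{seconds // 3600:02d}:{seconds % 3600 // 60:02d}:{seconds % 60:02d}"


for pages in (100, 500):
    print(f"{pages} pages -> {as_hms(single_pass_seconds(pages))}")
# 100 pages -> 00:02:05
# 500 pages -> 00:10:25
```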

**Edit 9/1/20: In the recently published pricing, both the input prompt and the output text count toward the billed token count. This makes the summarization use case described above exceedingly expensive. By some napkin math, you are looking at a few dollars to summarize a podcast or short book, and ~$100 to summarize a Harry Potter book.
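
A napkin-math sketch of why billing the prompt hurts: every call pays for its whole prompt (instruction, recap, and new text) plus its output, so you are billed for roughly the full 2,048-token window each time even though only ~1,000-1,800 of those tokens are new source text. Assumptions: each call fills the window, the price per 1K tokens is backed out of the $0.16-per-call figure above, and the 25,000-token source is hypothetical.

```python
import math

# ASSUMPTION: price backed out of "$0.16 per full 2,048-token call".
USD_PER_1K_TOKENS = 0.16 / 2.048  # ~0.078


def summarization_cost_usd(source_tokens: int, new_tokens_per_call: int,
                           billed_tokens_per_call: int = 2048,
                           usd_per_1k_tokens: float = USD_PER_1K_TOKENS) -> float:
    """Single-pass context-stuffing cost.

    Every call is billed for its whole prompt (instruction + recap + new text)
    plus its output, not just for the new source text it consumes.
    """
    calls = math.ceil(source_tokens / new_tokens_per_call)
    return calls * billed_tokens_per_call * usd_per_1k_tokens / 1000


# Hypothetical 25,000-token source at 1,000 new tokens per call -> 25 full calls.
print(f"${summarization_cost_usd(25_000, 1_000):.2f}")
```

That works out to about $4.00 for 25 calls, in line with the 'few dollars' estimate; longer sources scale linearly with the number of calls.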

Toxic outputs

I don't want to dwell too much on this, as I know that OpenAI is actively working on making this a great user experience. That said, right now the early content filter they have in place just isn't very good. 'Toxic' output is incredibly hard to characterize because context and nuance matter. I was writing movie scenes, and it flagged nearly every output as toxic. I am very hopeful they improve on this quickly, but for now I would recommend that every company using GPT-3 in user-facing apps implement its own filters. That is a downer, as it significantly undercuts the 'it just works' nature of the OpenAI API.
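
One way to layer your own filter on top of the API, sketched under assumptions: `gate_output` and `keyword_scorer` are hypothetical names, and the keyword scorer is a deliberately crude placeholder. As the movie-scene example shows, a real scorer needs to understand context, so in practice you would swap in a classifier suited to your own domain and tune the threshold per use case.

```python
from dataclasses import dataclass
from typing import AbstractSet, Callable, Optional

# HYPOTHETICAL interface: a scorer maps text to a 0-1 "likely unacceptable" score.
Scorer = Callable[[str], float]


@dataclass
class FilterResult:
    allowed: bool
    score: float
    text: Optional[str]  # None when the output is withheld


def gate_output(text: str, scorer: Scorer, threshold: float = 0.5) -> FilterResult:
    """Withhold model output whose score meets or exceeds the threshold."""
    score = scorer(text)
    if score >= threshold:
        return FilterResult(allowed=False, score=score, text=None)
    return FilterResult(allowed=True, score=score, text=text)


def keyword_scorer(blocklist: AbstractSet[str]) -> Scorer:
    """Placeholder scorer: 1.0 if any blocklisted word appears, else 0.0.

    Far too crude for production; it ignores exactly the context and nuance
    that make toxicity hard to judge.
    """
    lowered = {word.lower() for word in blocklist}

    def score(text: str) -> float:
        words = {w.strip(".,!?;:\"'").lower() for w in text.split()}
        return 1.0 if words & lowered else 0.0

    return score


scorer = keyword_scorer({"badword"})
print(gate_output("A perfectly pleasant sentence.", scorer))
print(gate_output("This has a BadWord in it!", scorer))
```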

Everyone has the same engines

As it stands right now, everyone has access to the same four pre-trained engines: ada, babbage, curie, and davinci (ranging from small to large). This means that both you and your competitors can get the same outputs from the model. All you have the power to modify is the input prompt and what you do with the outputs. Simply put, the value of your business will not come from a commodity (the API); it will come from all the surrounding processes. For example, many plugins for design and no-code tools have been demoed. I promise that a lot of work that has nothing to do with GPT-3 is happening behind the scenes to produce the end results you see. Those processes are what will make your company or product special, not the fact that it is 'powered by OpenAI'.

Benefits

It is seriously awesome

I can't stress this enough. The OpenAI API is a great piece of engineering all around, and I am very grateful that so many people worked hard to produce it 👏. It has gotten so many people to care about AI and is, in a way, the reason this project (Honest AI) was started now.

It is incredibly easy to use

Using GPT-3 for simple tasks is about as easy as writing your first 'hello world'. This is part of the reason it feels like magic and has allowed so many people to build weekend demos. Because it is a generative transformer that continues whatever text you give it, the input you feed it determines the output. This means that instead of engineering code, you have to creatively come up with input prompts. It is really amazing how small differences in prompt setup have a material impact on how well the API accomplishes your desired task, which makes creativity highly valuable when working with GPT-3.
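
To make 'prompts instead of code' concrete, here is a sketch of one common few-shot layout: an instruction, a few worked examples, then an open slot for the model to complete. The layout, the 'Input'/'Output' labels, and the helper name are my assumptions rather than an OpenAI-prescribed format; they are exactly the kind of small details worth varying, since they can change the results.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str,
                          input_label: str = "Input", output_label: str = "Output") -> str:
    """Assemble a plain-text few-shot prompt: instruction, worked examples, open slot."""
    parts = [task.strip(), ""]
    for source, target in examples:
        parts += [f"{input_label}: {source}", f"{output_label}: {target}", ""]
    # End on the output label so the model's continuation is the answer.
    parts += [f"{input_label}: {query}", f"{output_label}:"]
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Extract the main character trait from each sentence.",
    [("She never gave up, no matter how hard it got.", "persistent")],
    "He shared his lunch with every new kid at school.",
)
print(prompt)
```

Changing the labels, the instruction wording, or the number and order of examples is the 'creative' work the paragraph describes; the model itself stays the same.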

The results are consistently great

The largest engine (davinci) is the first language model that I have ever been really blown away by. The results it gives are truly amazing. It is creative in ways that most people aren't. It forms relationships between objects, people, themes, and narratives that are enjoyable to work with. It also works very well for straightforward NLP tasks, such as picking out characteristics from an input sentence.

They allow for fine-tuning

OpenAI has already started giving people access to fine-tune specific engines for more accurate results. This has the potential to drastically improve response quality and latency. It is worth noting that they do not allow fine-tuning of the largest 'davinci' model, so it will be important to have enough training data for the smaller models to make accurate predictions. There is an additional waitlist for this, even for the limited number of people who already have beta access, and according to the Slack group it will be many months before it is commonly available.

This is the future ('directionally correct')

Whether you are a founder, engineer, or investor, it is clear that this type of abstract problem solving will only become more prevalent as machine learning advances. Regardless of the risks, history has shown that people front-running major trends** have been successful overall, if only in understanding the risks more clearly than others for future ventures.

**This is not to say that companies started now will succeed. It may well still be too early.

It works perfectly as a human helper

While anecdotal, I have started leaving a tab with the GPT-3 playground open, just to feed it things I come across during the day. It will easily summarize emails and small documents, answer questions, or just provide some entertaining ideas. This will be a serious use case for OpenAI to pursue.

It has the hype

Hype is a real benefit. Everyone is talking about GPT-3 in a way I have never seen in AI before. For press coverage or pitching technical users, it is always easier to go with the flow than against it. Just be sure the product really uses GPT-3 rather than only slapping the label on.

So, should you build or invest?

It is simply too early to bank an entire startup or product line on the usage of GPT-3. In order to be comfortable with adding it as a critical path you should think through:

- How much it will cost. What are your unit costs? Unless each API call returns $1, I would wait until they release the pricing details in early September to get serious.

- Could end-users be exposed to toxic outputs? If the answer is yes, your startup will be a little harder. If nothing else, you will face increased scrutiny during the production review.

Exceptions:

- You are using it to build end products, where one generation will lead to many sales, e.g., writing a book or movie script, summarizing public documents, or other creative works.

- You are building infrastructure products to support future use cases. There is plenty of green space for picks-and-shovels businesses here.

My 2¢

Investors -

You should be taking all the NLP/GPT-3 meetings you can. Learn about this stuff sooner rather than later.

Founders -

You should think about how to build a business that is enhanced by GPT-3, but not dependent upon it.

I am very bullish on the API overall. I think the OpenAI team is working quickly, is very responsive to feedback, and has users' best interests at heart. For their very first product, this has been quite the launch! Even with the risks present, I am currently building a few different apps myself (they all fall into the exceptions above).

Building something or investing in GPT-3? Feel free to contact me at [email protected].